
- docs/assets/augmentations/document_shearx.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_color_jitter.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_downscale.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_dropout.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_elastic.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_erosion_dilation.png: 0 additions, 0 deletions

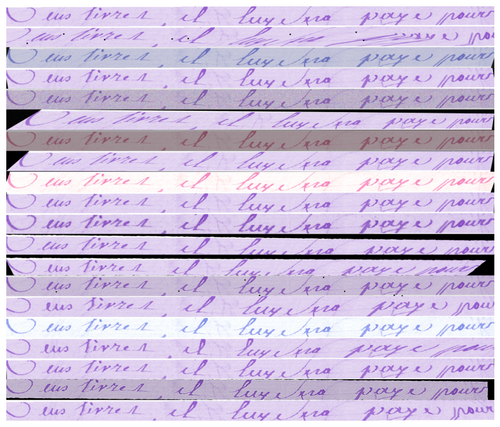

- docs/assets/augmentations/line_full_pipeline.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_gaussian_blur.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_gaussian_noise.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_grayscale.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_perspective.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_piecewise.png: 0 additions, 0 deletions
- docs/assets/augmentations/line_sharpen.png: 0 additions, 0 deletions

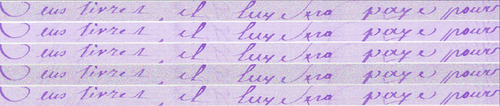

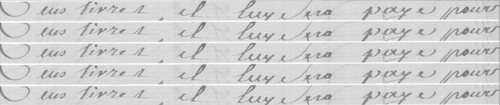

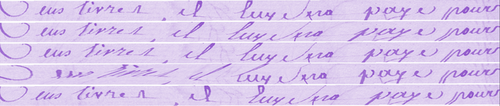

- docs/assets/augmentations/line_shearx.png: 0 additions, 0 deletions
- docs/usage/train/augmentation.md: 137 additions, 0 deletions
- docs/usage/train/index.md: 1 addition, 0 deletions
- docs/usage/train/parameters.md: 117 additions, 141 deletions
- mkdocs.yml: 1 addition, 0 deletions
- requirements.txt: 1 addition, 0 deletions
- tests/conftest.py: 5 additions, 4 deletions

374 KiB

85.9 KiB

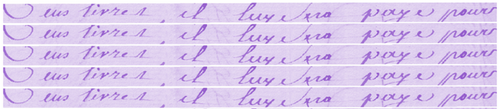

docs/assets/augmentations/line_downscale.png

0 → 100644

86.9 KiB

docs/assets/augmentations/line_dropout.png

0 → 100644

83.5 KiB

docs/assets/augmentations/line_elastic.png

0 → 100644

113 KiB

90.2 KiB

329 KiB

86.1 KiB

105 KiB

docs/assets/augmentations/line_grayscale.png

0 → 100644

30 KiB

82 KiB

docs/assets/augmentations/line_piecewise.png

0 → 100644

86.9 KiB

docs/assets/augmentations/line_sharpen.png

0 → 100644

90 KiB

docs/assets/augmentations/line_shearx.png

0 → 100644

84.6 KiB

docs/usage/train/augmentation.md

0 → 100644

| requirements.txt |
| --- |
| albumentations==1.3.1 |
| arkindex-export==0.1.3 |
| boto3==1.26.124 |
| editdistance==0.6.2 |
| ... |
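The requirements pin exact versions with `name==version` specifiers. As a hedged sketch that is not part of this change, the hypothetical helper `parse_pins` below reads such lines into a dictionary, skipping blank lines, comments and the elided `...` row. The second hypothetical helper, `mismatched`, compares those pins against the current environment using the standard-library `importlib.metadata.version`.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_pins(lines):
    """Map package name -> pinned version for lines of the form 'name==version'.

    Hypothetical helper for illustration only. Lines without '==' (blank lines,
    comments, the elided '...' row) are skipped.
    """
    pins = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if "==" not in line:
            continue
        name, _, pinned = line.partition("==")
        pins[name.strip()] = pinned.strip()
    return pins


def mismatched(pins):
    """Return {name: (pinned, installed_or_None)} for packages that do not match their pin."""
    out = {}
    for name, pinned in pins.items():
        try:
            installed = version(name)  # installed distribution version, as a string
        except PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != pinned:
            out[name] = (pinned, installed)
    return out


if __name__ == "__main__":
    # Excerpt of the pinned requirements shown above; the "..." row is ignored.
    excerpt = [
        "albumentations==1.3.1",
        "arkindex-export==0.1.3",
        "boto3==1.26.124",
        "editdistance==0.6.2",
        "...",
    ]
    pins = parse_pins(excerpt)
    print(pins)
    print(mismatched(pins))
```

Exact pins keep training runs reproducible, at the cost of having to bump versions explicitly; the check above only reports drift and does not install anything.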