No more DPI adjusting

Parent: 620e8856

Showing

- dan/ocr/transforms.py 21 additions, 32 deletionsdan/ocr/transforms.py

- docs/assets/augmentations/document_original.png 0 additions, 0 deletionsdocs/assets/augmentations/document_original.png

- docs/assets/augmentations/document_random_scale.png 0 additions, 0 deletionsdocs/assets/augmentations/document_random_scale.png

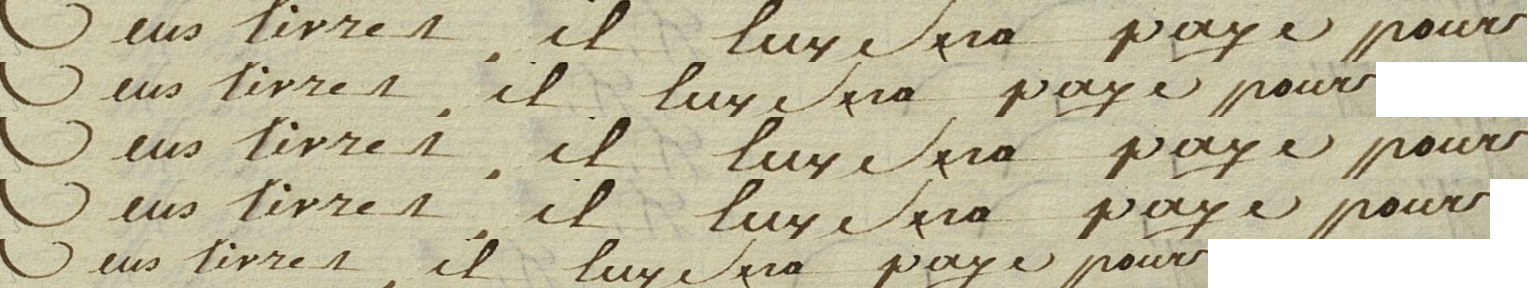

- docs/assets/augmentations/line_full_pipeline_2.png 0 additions, 0 deletionsdocs/assets/augmentations/line_full_pipeline_2.png

- docs/assets/augmentations/line_original.png 0 additions, 0 deletionsdocs/assets/augmentations/line_original.png

- docs/assets/augmentations/line_random_scale.png 0 additions, 0 deletionsdocs/assets/augmentations/line_random_scale.png

- docs/usage/train/augmentation.md 8 additions, 6 deletionsdocs/usage/train/augmentation.md

- docs/usage/train/config.md 2 additions, 1 deletiondocs/usage/train/config.md

- tests/data/training/models/best_0.pt 1 addition, 1 deletiontests/data/training/models/best_0.pt

- tests/data/training/models/last_3.pt 1 addition, 1 deletiontests/data/training/models/last_3.pt

213 KiB

748 KiB

204 KiB

docs/assets/augmentations/line_original.png

0 → 100644

21.7 KiB

628 KiB

Source diff could not be displayed: it is stored in LFS.