Do not normalize tensor for attention map visualization

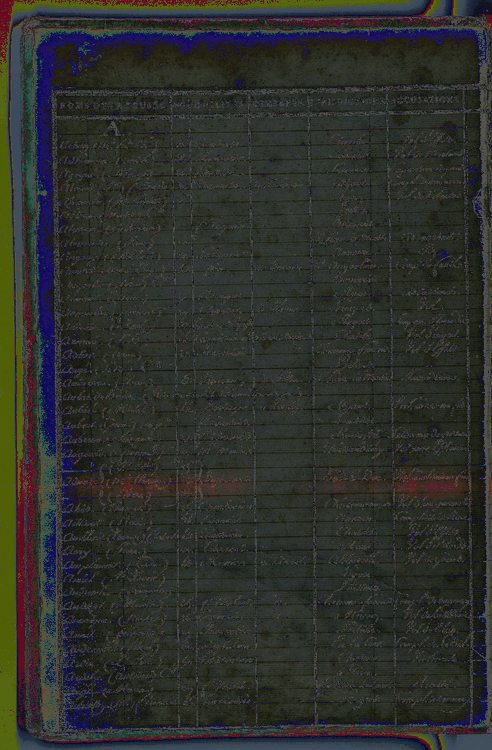

The visualization of the attention map looks strange because the image is normalized.

- This command:

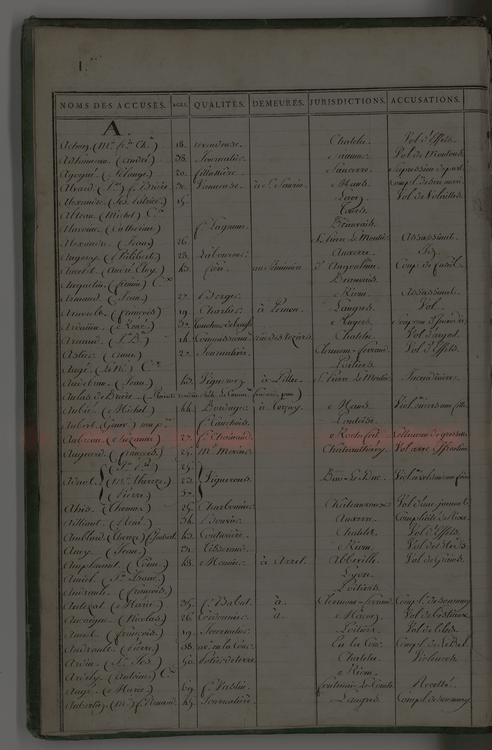

teklia-dan predict --image left.jpg --model dan_simara_x2a_left/checkpoints/best_625.pt --parameters dan_simara_x2a_left/parameters.yml --charset dan_simara_x2a_left/charset.pkl --attention-map --output dan_simara_x2a_left/attention/- Gives the following visualization (right):

| Without normalization (old behavior) | With normalization (current behavior) |

|---|---|

|

|

- We need to change the input tensor in `plot_attention` to a padded, preprocessed, but non-normalized tensor.
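A minimal sketch of the proposed change, assuming a NumPy-based pipeline. It builds the padded, preprocessed image once, normalizes a copy for the model, and keeps the non-normalized version for `plot_attention`. The `MEAN`/`STD` values and the helper names `pad_image` and `prepare_inputs` are hypothetical and do not come from DAN's actual preprocessing code.

```python
import numpy as np

# Hypothetical per-channel normalization statistics (assumption);
# DAN's real values and preprocessing steps may differ.
MEAN = np.array([0.5, 0.5, 0.5], dtype=np.float32)
STD = np.array([0.25, 0.25, 0.25], dtype=np.float32)


def pad_image(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Zero-pad an HxWxC image at the bottom/right up to (height, width)."""
    h, w, _ = image.shape
    return np.pad(image, ((0, height - h), (0, width - w), (0, 0)))


def prepare_inputs(image: np.ndarray, height: int, width: int):
    """Return (model_input, display_image) derived from the same padded image.

    model_input: normalized and channels-first, fed to the model.
    display_image: padded and preprocessed but NOT normalized, for plotting.
    """
    # Assumed preprocessing step: scale 8-bit pixels to [0, 1].
    preprocessed = image.astype(np.float32) / 255.0
    padded = pad_image(preprocessed, height, width)
    # Normalization is applied to a copy used only by the model.
    normalized = (padded - MEAN) / STD
    model_input = np.transpose(normalized, (2, 0, 1))  # HWC -> CHW
    return model_input, padded


rgb = np.full((2, 3, 3), 255, dtype=np.uint8)  # all-white 2x3 image
model_input, display_image = prepare_inputs(rgb, 4, 4)
```

With the assumed statistics, `display_image` stays in [0, 1], while the normalized `model_input` ranges over [-2, 2]. A shift of that kind can distort the overlay when the normalized tensor is plotted directly.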